Year: 2023

Duration: 3 months

Project Type: Virtual Reality Experience, Ambisonic - Spatial Audio | Graduate Student Project

Project Overview

An underwater spatial audio experience for XR, designed to transport users to the ocean's depths and surround them with the rich, diverse soundscape of marine life.

Introduction

I was introduced to something that blew my mind: 🔊 Spatial Audio using Ambisonic systems. Imagine being thrown into a swimming pool before you even know how to float. That's exactly how I felt when I began learning this alongside Unreal Engine 5. I was flapping around, barely staying afloat, but oh, was it thrilling!

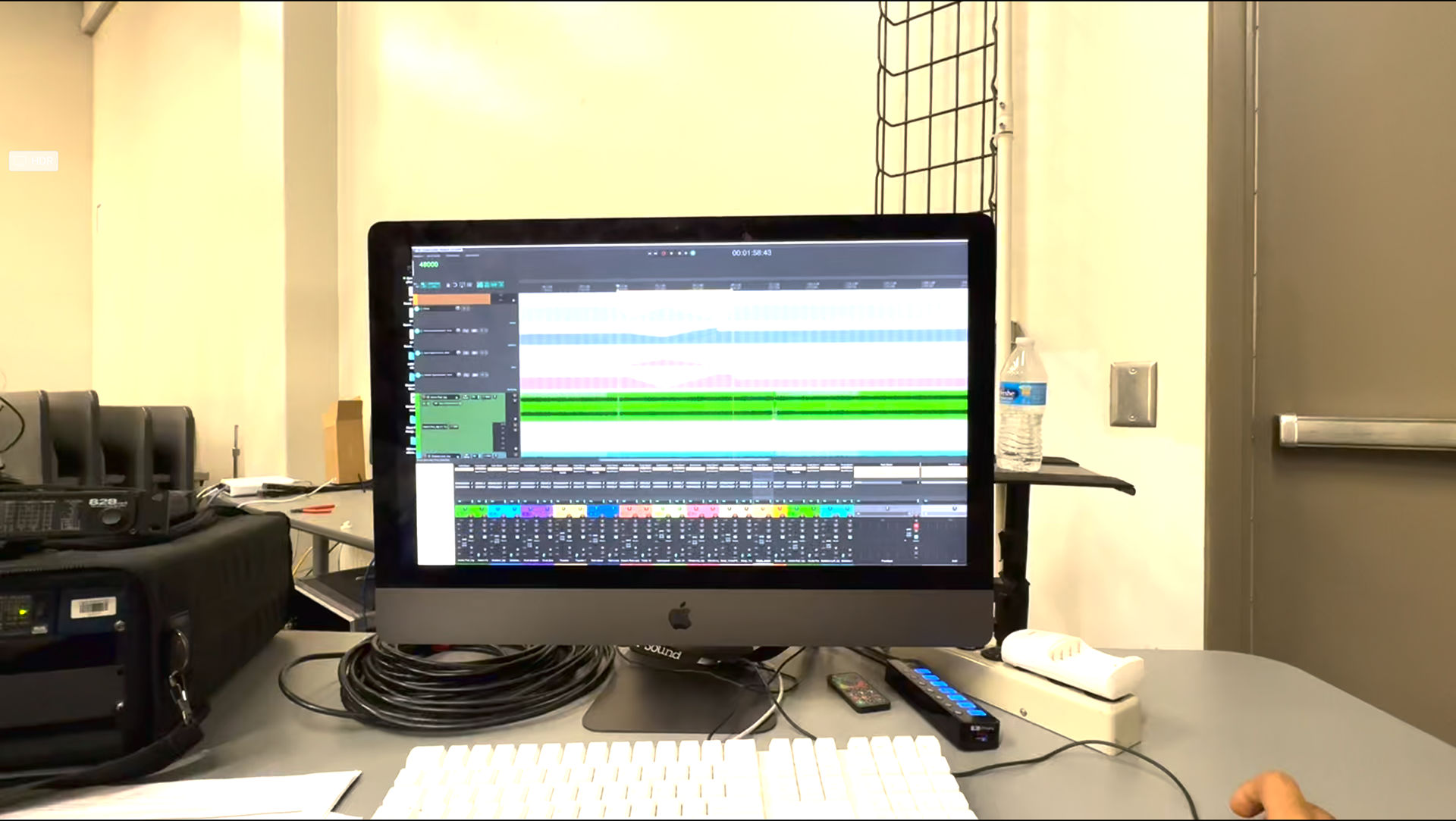

A snap of the spatial audio digital workstation.

One of my friends came to experience my project.

Hand mesh linked with the Oculus 2 controllers

The Audio Dome

Screenshot of the underworld - Unreal Engine

This is where the entire experience was built and tested.

The Process

The creative process for this project began with research into the underwater world, focusing on the sounds and textures of the environment. I also consulted a friend who is a certified scuba diver to gain a deeper understanding of the underwater ecosystem. He shared some real underwater photos, which I used as reference while creating the visuals in Unreal Engine.

Creating the soundscape was a journey in itself. I recorded real-world sounds, manipulated them, and even synthesized new ones. Using spatial audio parameters like distance, azimuth, and elevation, I positioned and layered each element to create depth and dimension. I wanted users to feel the weight of the water around them, hear the distant calls of whales, and sense the bubbles rising past them.
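To illustrate how azimuth, elevation, and distance turn into a position in the sound field, here is a minimal Python sketch of first-order ambisonic encoding for a single mono sample. It is not taken from the project itself: the function name, the AmbiX convention (ACN channel order W, Y, Z, X with SN3D normalization), and the simple inverse-distance gain are all assumptions chosen for illustration.

```python
import math


def encode_first_order(sample, azimuth_deg, elevation_deg,
                       distance_m, ref_distance_m=1.0):
    """Encode one mono sample into first-order ambisonics (hypothetical helper).

    Assumed convention: AmbiX (ACN order W, Y, Z, X; SN3D normalization).
    Azimuth is measured counterclockwise from the front (90 = left),
    elevation upward from the horizontal plane.
    """
    az = math.radians(azimuth_deg)
    el = math.radians(elevation_deg)

    # Simple inverse-distance attenuation, clamped so that sources closer
    # than the reference distance are not boosted. This is an assumed
    # distance model, not the one used by any particular tool.
    gain = ref_distance_m / max(distance_m, ref_distance_m)
    s = sample * gain

    w = s                                # omnidirectional component
    y = s * math.sin(az) * math.cos(el)  # left-right
    z = s * math.sin(el)                 # up-down
    x = s * math.cos(az) * math.cos(el)  # front-back
    return (w, y, z, x)
```

For example, a distant whale call could be encoded with something like `encode_first_order(sample, azimuth_deg=120, elevation_deg=-10, distance_m=30)`, which places it behind and to the left, slightly below the listener, and much quieter than a nearby bubble.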

User Experience

I intend for the user to feel like they are truly underwater when they experience the project. The spatial audio will create a sense of immersion, while the interactive elements will allow them to explore and interact with their surroundings. This project will leave the user with a greater appreciation for the underwater world.

In addition to the soundscape, I also created interactive elements that allow the user to explore and interact with their surroundings. These include things like interactive bubbles and a trigger that starts a shark animation.

Technologies & Hardware

Software and hardware used for this project:

- Unreal Engine 5

- Reaper (DAW)

- Genesis Speakers 36 + 6 LFE

- Dante Audio

- Spat Revolution

- Max MSP

This journey wasn't just about learning tools. It was about discovering how Spatial Audio is revolutionizing the soundscape of film, music, VR experiences, and entertainment. I dived into DAWs like #ProTools, #LogicProX, and #Reaper, alongside cutting-edge tools like #SpatRevolution and #MaxMSP. Connecting these with Unreal Engine 5 via OSC messages was like watching different worlds collide to create something extraordinary.

Final Rendering from VR experience

Preview as seen on the VR headset.

Thanks to the incredible guidance of Prof. Garth Paine and Prof. Nicholas Pilarski, along with PhD student Frank Liu, I started to find my rhythm. They showed me how to record, process, and integrate ambisonic audio with Unreal Engine 5, a beast of a game engine. It felt like trying to tame two gigantic dragons simultaneously, but the challenge made it all the more exciting.

Some nights, I stayed at the center until the clock struck midnight, losing track of time in the awe-inspiring audio dome. Other times, I left at sunrise, exhausted but exhilarated. Picture a 36-speaker + 6 LFE setup surrounding you, creating a soundscape that feels alive. It was pure magic.